Screen Scraping: How to Screen Scrape a Website with PHP and cURL


Screen scraping has been around for as long as people have been writing code for the web, and there are dozens of resources out there that explain how to do it (google "php screen scrape" to see what I mean). I want to touch on some things that I've figured out while scraping some screens. I assume you have PHP running and know your way around Windows.


- Do it on your local computer. If you are scraping a lot of data, you need an environment that doesn't have script time limits. The server that I use has a max execution time of 30 seconds, which just doesn't work if you are scraping a lot of data off of slow pages. The best thing to do is to run your script from the command line, where there is no limit on how long a script can take to execute. This way, you're not hogging server resources if you are on a shared host, or your own server's resources if you are on a dedicated host. Obviously, if you're screen scraping data to serve 'on the fly', then this scenario won't work, but it's awesome for collecting data. Make sure you can run PHP from the command line by opening a command prompt window and typing 'php -v'. You should get the version of PHP you are running. If you get an error message, then you'll need to add the folder that contains your PHP executable to your PATH environment variable.

- Do it once. If you are writing a script that loops through all of the pages on a site, or a lot of pages, make sure your script works right before you execute it. If the host sees what you are doing and doesn't like it, they could just block you. So it's best to make sure your script runs correctly by doing a small test run. Then, when that works, unleash your script on the entire site. In that same vein, don't screen scrape a site all the time. You're just going to piss off the admin if they figure it out.

- Do it smart. Make sure the site doesn't offer an API for doing what you want before you scrape it. Often, the API can get you the information quicker and in a better format than a screen scrape can.

- Use the cURL library. I really don't know any way to scrape a page other than cURL; it works so well that I have never had to try anything else. Since you are going to be using PHP from the command line, you're also going to want to use curl from the command line (it's easier than using the PHP functions, and external libraries are not loaded anyway). Get curl from http://curl.haxx.se/download.html and download the non-SSL version (keep in mind that a non-SSL build can't fetch https:// pages). Add the folder that contains curl.exe to your PATH environment variable, and make sure you can run curl from the command line.
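As a quick check for the first tip, the sketch below prints which PHP interface the script is running under and what its execution time limit is. The function name `describe_runtime()` is made up for this illustration; `PHP_SAPI` and `ini_get('max_execution_time')` are standard PHP. When run with the command-line binary, the limit should normally report as unlimited.

```php
<?php
// Hypothetical helper: summarize the interface and time limit the script runs under.
function describe_runtime(): string
{
    // A value of 0 means "no limit", which is the default for the CLI.
    $limit = (int) ini_get('max_execution_time');

    return sprintf(
        'sapi=%s max_execution_time=%s',
        PHP_SAPI,
        $limit === 0 ? 'unlimited' : $limit . 's'
    );
}

echo describe_runtime(), PHP_EOL;
```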

Those are all of my tips. Here is some screen scrape code that I use.


To call curl, just write a function like this. This is so much easier than using the PHP functions, but you probably don't want to use shell_exec on a web server where someone can supply their own input, because they could use it to run arbitrary shell commands. I only use this code when I run it locally.

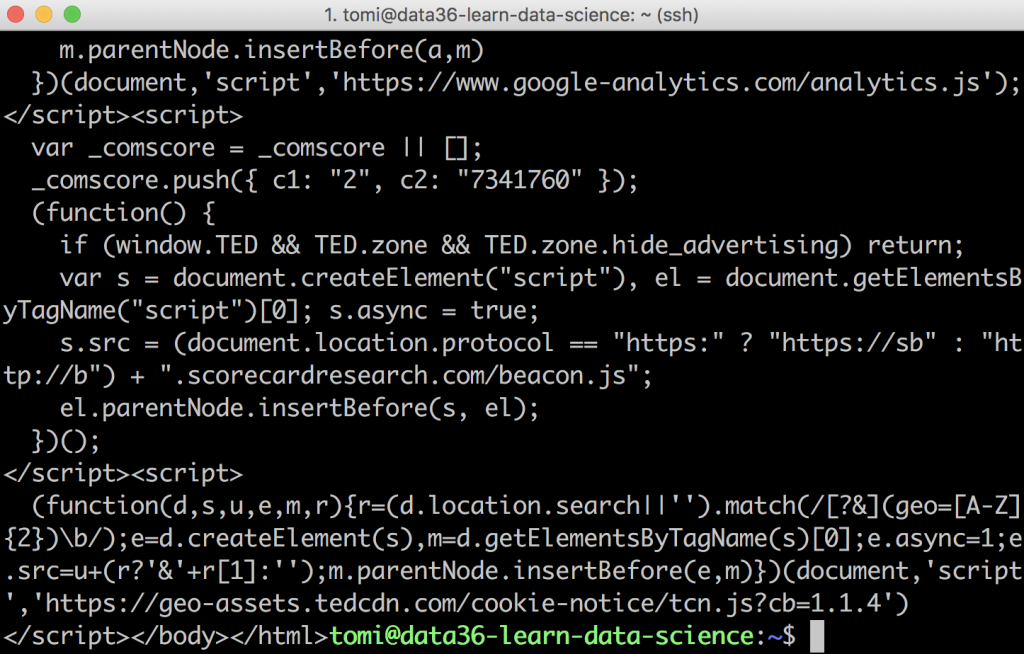
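Since the listing is only available as an image, here is a minimal sketch of what such a function might look like. It is not the original code: the names `build_curl_command()` and `curl_fetch()` are hypothetical, and the `-s` (silent) and `-L` (follow redirects) flags are my own choice. It assumes curl is on your PATH, as described in the tips.

```php
<?php
// Hypothetical helper: build the shell command used to fetch a URL.
function build_curl_command(string $url): string
{
    // -s hides the progress meter, -L follows redirects.
    // escapeshellarg() quotes the URL so it cannot break out of the command.
    return 'curl -s -L ' . escapeshellarg($url);
}

// Hypothetical helper: run curl and return the page body, or '' on failure.
function curl_fetch(string $url): string
{
    $output = shell_exec(build_curl_command($url));

    // shell_exec() returns null or false when the command fails or prints nothing.
    return is_string($output) ? $output : '';
}
```

Quoting the URL with `escapeshellarg()` narrows the injection risk mentioned above, but it is still safest to keep this kind of code for local use.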

This is the code that calls the curl function. We start by turning on the output buffer, which greatly speeds up our code. This particular code grabs the title of a page and prints it.
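A rough sketch of that kind of caller follows. It is an illustration rather than the original listing: `extract_title()` is a hypothetical helper, the regular expression is a simple assumption about how titles appear in the markup, and the URL is taken from the first command-line argument. Unlike the simplest version, it prints a placeholder when no title is found.

```php
<?php
// Hypothetical helper: return the text of the first <title> element, or null if none.
function extract_title(string $html): ?string
{
    // "i" ignores case, "s" lets "." match newlines inside the title.
    if (preg_match('/<title[^>]*>(.*?)<\/title>/is', $html, $m)) {
        return trim(html_entity_decode($m[1], ENT_QUOTES, 'UTF-8'));
    }
    return null;
}

// Only touch the network when a URL is passed on the command line.
if (PHP_SAPI === 'cli' && isset($argv[1])) {
    ob_start(); // collect output in the buffer instead of writing piecemeal

    // Assumes curl is on the PATH; the flags are an illustrative choice.
    $html = (string) shell_exec('curl -s -L ' . escapeshellarg($argv[1]));
    $title = extract_title($html);
    echo ($title ?? '(no title found)'), PHP_EOL;

    ob_end_flush(); // send everything collected so far
}
```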

To run your script from the command line and write its output to a file, simply call it like this:

php my_script_name.php > output.txt

Anything the script prints, including whatever the output buffer has collected, ends up in the file you redirect the output to.


This is a very simple example that doesn't even check whether a title exists on the page before it prints, but hopefully you can use your imagination to expand it into something that might grab all of the titles on an entire site. A common thing that I do is use a program like Xenu Link Sleuth to build the list of links I want to scrape, and then use a loop to go through and scrape every link on the list (in other words, use Xenu as your spider and your own code to process the results). This is how I built the Shoemoney Blog Archive list. The challenge and fun of screen scraping is figuring out how to use the data that is out there to your advantage.
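The loop over a link list could be sketched roughly as follows. This is an assumption-laden illustration: it supposes the link list has been saved as plain text with one URL per line (your spider's export format may differ), and `parse_link_list()` and `scrape_all()` are hypothetical names. The `$limit` argument allows a small test run before the full crawl, and the pause between requests keeps the load on the site down.

```php
<?php
// Hypothetical helper: turn a link list (assumed one URL per line) into an array.
function parse_link_list(string $text): array
{
    $urls = [];
    foreach (preg_split('/\R/', $text) as $line) {
        $line = trim($line);
        // Keep only lines that look like http(s) URLs; skip blanks and anything else.
        if (preg_match('#^https?://#i', $line)) {
            $urls[] = $line;
        }
    }
    // Drop duplicates and reindex from 0.
    return array_values(array_unique($urls));
}

// Hypothetical helper: call $scrape for each URL, pausing between requests.
// A $limit above 0 caps the number of URLs, which is handy for a test run.
function scrape_all(array $urls, callable $scrape, int $limit = 0, int $pauseSeconds = 1): array
{
    if ($limit > 0) {
        $urls = array_slice($urls, 0, $limit);
    }

    $results = [];
    foreach ($urls as $i => $url) {
        if ($i > 0 && $pauseSeconds > 0) {
            sleep($pauseSeconds); // be polite to the host
        }
        $results[$url] = $scrape($url);
    }
    return $results;
}
```

For a trial run you might call something like `scrape_all(parse_link_list(file_get_contents('links.txt')), $myScraper, 5)`, where `links.txt` and `$myScraper` are placeholders for your own file and callback, and then drop the limit once the results look right.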